I’m a masochist, so I usually sort by “New”. Lemmy is small enough that I can usually get through most of the new posts in a reasonable amount of time.

That said, if I want a more chill experience, I will use “Scaled”, which sometimes bubbles up something I might have missed.

Finally, I will use “Active” if I’m really bored and want to see what most people are engaged with… but that is pretty rare.

It could be which communities you are subscribed to. I run a small instance with about three users, and here are my stats after about three months as well:

    9.5G    ./pictrs
    12G     ./postgres
    8.0K    ./lemmy-ui

What version of Lemmy are you using? A recent update also introduced some space savings in the database (I think).

1·1 year ago

It comes down to bridging. I use Discord and Slack via IRC bridges. I actually use Slack a lot (for work), but primarily through irslackd. I do not use Slack for anything outside of work and would prefer to keep it that way.

For Discord, I primarily use bitlbee-discord. With this bridge/gateway, I can chat on several servers at the same time, so I wouldn’t mind using Discord for different communities if I had to.

Matrix is last because I don’t really have a good bridging solution for it, and it just seems clunkier to me than the other two.

3·1 year ago

I would be less willing to contribute to or participate in discussions if newer platforms such as Discord, Slack, or Matrix are used. Of those three, I would prefer Discord, then Slack, then Matrix.

As it is, I only use Slack for work, and mostly avoid Discord and Matrix except for a few mostly dead channels/servers.

I understand that this is not the mainstream view and that most people prefer the newer platforms, but personally, I am not a fan of them nor do I use them.

2·1 year ago

I’m fine with IRC (I actually prefer it, as I use it all the time).

I agree with others that a mailing list is more intimidating and more of a hassle, but if there is a web archive, I can live with that. It wouldn’t be my preference, but it wouldn’t be an insurmountable barrier (I have contributed to Alpine Linux in the past via their mailing list workflow).

29·1 year ago

I think this is the author being humble.

jmmv is a long-time NetBSD and FreeBSD contributor (tmpfs, ATF, pkg_comp), has worked as an SRE at Google, and has been a developer on projects such as Bazel (build infrastructure). They probably know a thing or two about performance.

Regarding the overall point of the blog post, I agree with jmmv: Big O is a measure of efficiency at scale, not a measure of performance.

As someone who teaches Data Structures and Systems Programming courses, I demonstrate this to students early on by showing them multiple solutions to a problem, such as detecting duplicates in a stream of input. After analyzing the time and space complexities of the different solutions, we run the programs and measure the time. It turns out that the O(n log n) version using sorting can beat the O(n) version due to cache locality and how memory actually works.

Big O is a useful tool, but it doesn’t directly translate to performance. Understanding how systems work is a lot more useful and important if you really care about optimization and performance.
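To make the duplicate-detection example concrete, here is a minimal Python sketch of the two approaches: a hash-set scan that is O(n) expected time, and a sort-then-compare-neighbours pass that is O(n log n). The function names and input sizes are my own assumptions, not the actual course material. In CPython both versions carry interpreter and object overhead, so the cache-locality effect described above tends to show up most clearly in a compiled language such as C; treat the timings as something to measure on your own machine, not as a prediction.

```python
import random
import timeit


def has_duplicates_hash(values):
    """O(n) expected time, O(n) extra space: remember every value seen so far."""
    seen = set()
    for value in values:
        if value in seen:
            return True
        seen.add(value)
    return False


def has_duplicates_sort(values):
    """O(n log n) time: after sorting, any duplicates must sit next to each other."""
    ordered = sorted(values)  # sort a copy so the caller's list is untouched
    return any(a == b for a, b in zip(ordered, ordered[1:]))


if __name__ == "__main__":
    # Distinct values are the worst case: neither function can return early.
    data = random.sample(range(2_000_000), 200_000)
    for fn in (has_duplicates_hash, has_duplicates_sort):
        seconds = timeit.timeit(lambda f=fn: f(data), number=5)
        print(f"{fn.__name__}: {seconds:.3f}s for 5 runs")
```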

9·1 year ago

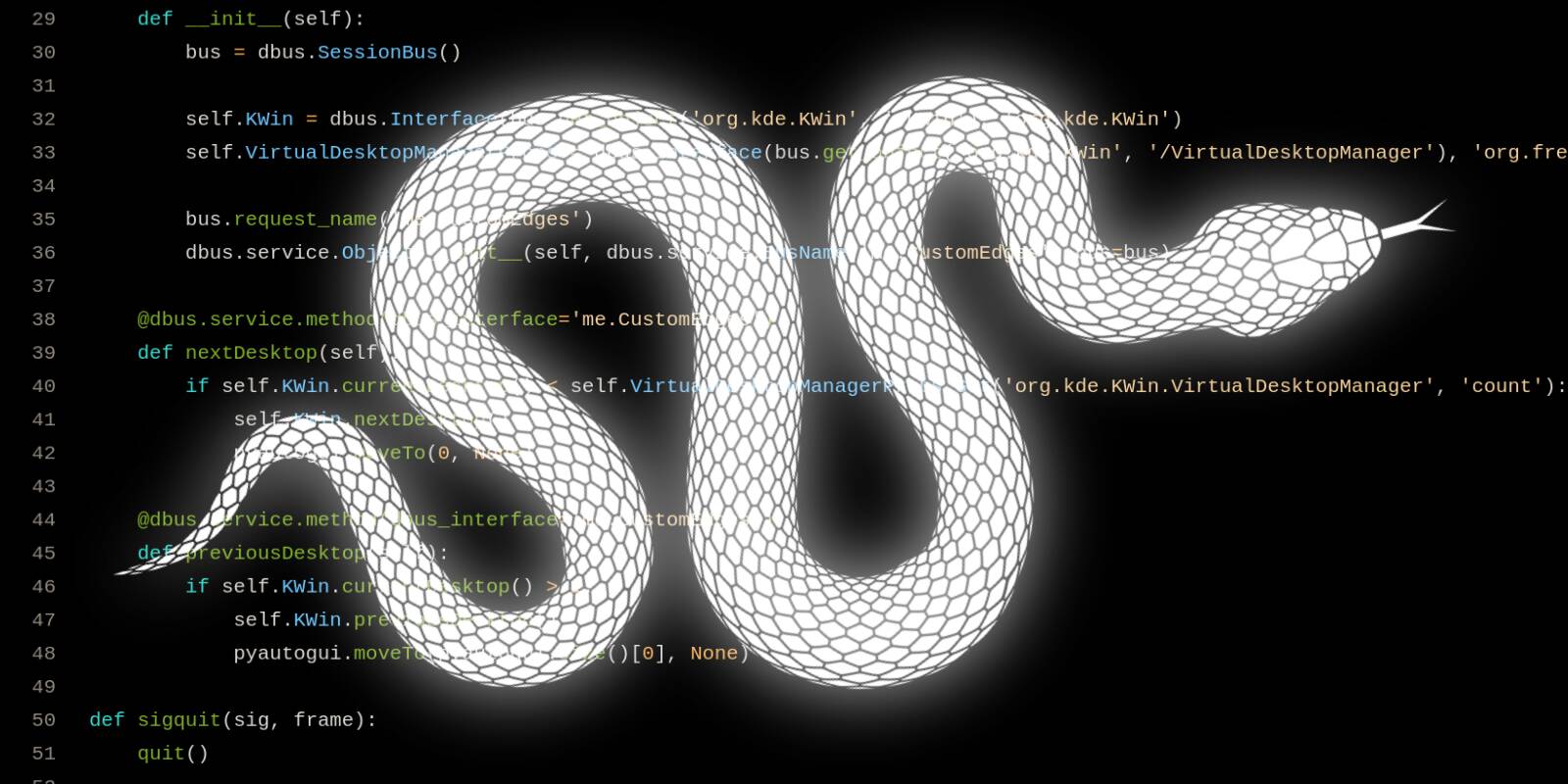

> Contributing immortal objects into Python introduces true immutability guarantees for the first time ever. It helps objects bypass both reference counts and garbage collection checks. This means that we can now share immortal objects across threads without requiring the GIL to provide thread safety.

This is actually really cool. In general, if you can make things immutable or avoid state, that will help you structure things concurrently. With immortal objects, you can now guarantee that immutability without costly locks. It will be interesting to see what the final benchmarks look like when this is fully implemented.
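One way to see immortality from Python code, assuming CPython 3.12 or newer (where PEP 683 immortal objects landed): `None` is immortal, so creating extra references to it leaves its reported reference count unchanged, while an ordinary object's count grows with every new reference. This is only a small illustration; `sys.getrefcount` is a CPython implementation detail, and the absolute numbers it reports vary between versions and builds, which is why the sketch compares before/after differences.

```python
import sys


def refcount_growth(obj, copies=1000):
    """Return how much sys.getrefcount(obj) changes while `copies` extra references exist."""
    before = sys.getrefcount(obj)
    holders = [obj for _ in range(copies)]  # each list slot is a new strong reference
    after = sys.getrefcount(obj)
    del holders
    return after - before


ordinary = object()
print("ordinary object:", refcount_growth(ordinary))  # grows by `copies` (1000)
print("None:", refcount_growth(None))  # stays at 0 on CPython 3.12+, where None is immortal
```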

121·1 year ago

Familiarity (my client distro is Pop!_OS, which is based on Ubuntu), and I like the LTS life cycle (it’s predictable).

I do uninstall snaps, though, and mostly just use Docker for things. I could use Debian, but again, for me it was about familiarity and support (there is a lot more Ubuntu-specific documentation).

5·1 year ago

You can escape the `:` with a backslash:

    URLS = https\://foo.example.com
    URLS += https\://bar.example.com
    URLS += https\://www.example.org

3·1 year ago

Do you have a `searxng` folder in the same folder as your `docker-compose.yml`? If so, perhaps it is not being mounted inside the container properly.

2·1 year ago

Interesting… I’ve only ever used `python3 -m cProfile script.py` when I’ve had to profile some code.
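For reference, the same profiler can also be driven from inside a script, which is handy when you only want to profile one call rather than the whole program. A minimal sketch using only the standard library's `cProfile` and `pstats` modules; `slow_sum` is a made-up workload. (From the command line, `python3 -m cProfile -s cumulative script.py` sorts the report the same way.)

```python
import cProfile
import io
import pstats


def slow_sum(n):
    """Hypothetical workload: sum of squares in a plain Python loop."""
    total = 0
    for i in range(n):
        total += i * i
    return total


profiler = cProfile.Profile()
profiler.enable()
result = slow_sum(200_000)
profiler.disable()

# Render the five most expensive entries (by cumulative time) into a string.
buffer = io.StringIO()
stats = pstats.Stats(profiler, stream=buffer)
stats.sort_stats(pstats.SortKey.CUMULATIVE).print_stats(5)
report = buffer.getvalue()
print(report)
```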

6·1 year ago

No, but basically jmp.chat takes over your phone number… it acts as your carrier for voice and SMS (similar to Google Voice). That may not be exactly what you want.

From the FAQ:

> You can use JMP to communicate with your contacts without them changing anything on their end, just like with any other telephone provider. JMP works wherever you have an Internet connection. JMP can be used alongside, or instead of, a traditional wireless carrier subscription.

The benefit of this is that you can receive voice and text on anything that can serve as an XMPP client.

12·1 year ago

You could consider using something like jmp.chat. It delivers SMS via XMPP (aka Jabber), so you could self-host an XMPP server and receive SMS that way. It also has some support for MMS (group chat, media), but my experience with it was mixed (I used it for about 3-4 years).

7·1 year ago

Indeed… :|

211·1 year ago

This looks incredibly cool and fun. I would be interested in trying to rewrite some of the games myself when I have some free time.

3·1 year ago

I sometimes use `gdb` with C and C++, but I never really use `pdb` with Python… I mostly stick with logging (ok, `print` statements). This is good information to know, though, and I should probably get better at using a debugger with Python.
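A minimal sketch of what a `pdb` session looks like, using only the standard library. Normally you would put `breakpoint()` in the code and type commands at the `(Pdb)` prompt; here the commands (`p`, `next`, `continue`) are fed from a string via `pdb.Pdb(stdin=..., stdout=...)` so the example runs unattended. `average` is just a hypothetical stand-in function.

```python
import io
import pdb


def average(values):
    total = sum(values)
    return total / len(values)


# These are the commands you would normally type at the (Pdb) prompt:
# print `values`, step over one line, print `total`, then resume.
commands = io.StringIO("p values\nnext\np total\ncontinue\n")
transcript = io.StringIO()
debugger = pdb.Pdb(stdin=commands, stdout=transcript, readrc=False, nosigint=True)

# runcall() starts tracing and stops at the first line of average().
result = debugger.runcall(average, [1, 2, 3])
print(transcript.getvalue())
print("result:", result)
```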

12·1 year ago

It will depend on how the threaded code is structured (how much is sequential versus parallel, Amdahl’s law, etc.), but it should at least be more effective at scaling up and taking advantage of multiple cores.
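As a rough illustration of the Amdahl’s law point: if a fraction p of a program's work can run in parallel on N cores, the best-case speedup is 1 / ((1 - p) + p / N), so the sequential part caps the gain no matter how many cores a no-GIL build can use. The sketch below just evaluates that formula; the fractions and core counts are illustrative, not measurements.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Best-case speedup predicted by Amdahl's law."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


for p in (0.5, 0.9, 0.99):
    row = ", ".join(f"{n} cores: {amdahl_speedup(p, n):.2f}x" for n in (2, 8, 64))
    # As cores -> infinity, the speedup approaches 1 / (1 - p).
    print(f"p = {p}: {row} (limit {1 / (1 - p):.0f}x)")
```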

That said, the change would come at a cost to single-threaded code. From PEP 703:

> The changes proposed in the PEP will increase execution overhead for --disable-gil builds compared to Python builds with the GIL. In other words, it will have slower single-threaded performance. There are some possible optimizations to reduce execution overhead, especially for --disable-gil builds that only use a single thread. These may be worthwhile if a longer term goal is to have a single build mode, but the choice of optimizations and their trade-offs remain an open issue.
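If you want to try this yourself, here is a minimal sketch for checking which kind of build you are running and timing the same CPU-bound work on one thread versus several. It assumes Python 3.13+ for the free-threading introspection (`sysconfig.get_config_var("Py_GIL_DISABLED")` and `sys._is_gil_enabled()`, the latter a private helper); on older versions it simply reports a GIL build. `busy_work` and the sizes are arbitrary choices. On a GIL build the multi-threaded run should not be much faster; on a free-threaded build it may be, while the single-threaded run may be slower, as the PEP warns.

```python
import sys
import sysconfig
import time
from concurrent.futures import ThreadPoolExecutor


def gil_status():
    """Return (built_without_gil, gil_currently_enabled)."""
    built_without_gil = bool(sysconfig.get_config_var("Py_GIL_DISABLED"))
    # sys._is_gil_enabled() only exists on 3.13+; assume the GIL is on otherwise.
    is_enabled = getattr(sys, "_is_gil_enabled", lambda: True)()
    return built_without_gil, is_enabled


def busy_work(n):
    """Pure-Python CPU-bound loop: no I/O, so on a GIL build threads take turns."""
    total = 0
    for i in range(n):
        total += i % 7
    return total


def timed(workers, chunks, n):
    """Run `chunks` copies of busy_work(n) on a pool of `workers` threads."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(busy_work, [n] * chunks))
    return time.perf_counter() - start, results


if __name__ == "__main__":
    print("free-threaded build: %s, GIL enabled: %s" % gil_status())
    for workers in (1, 4):
        seconds, _ = timed(workers, chunks=4, n=500_000)
        print(f"{workers} thread(s): {seconds:.3f}s")
```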

13·1 year ago

Pretty exciting… I just hope they are able to effectively avoid another long transition like the one from Python 2 to Python 3.

Oh, I’m sorry if this was discussed previously… I only returned to Lemmy a few weeks ago and didn’t see the story covered yet.